Intel Details PowerVia Chipmaking Tech: Backside Power Performing Well, On Schedule For 2024

2023-06-05

At next week’s annual VLSI Symposium, Intel will be presenting a trio of highly-anticipated papers about their progress with their upcoming PowerVia chip fabrication technology – the company’s in-development implementation of backside power delivery networks. Along with RibbonFET, Intel’s technology for gate-all-around transistors, PowerVia is slated to form Intel’s big one-two punch to the rest of the silicon lithography industry, introducing two major chip technologies together that Intel believes will vault the company back into the fab leadership position. Combined, the two technologies are going to be the backbone of Intel’s “angstrom” era fab nodes, which will go into high volume manufacturing next year, making Intel’s progress with the new technologies a subject of great importance both inside and outside of the company – and one which Intel wants to address.

When it comes to Intel’s chip manufacturing technology, the stakes for Intel’s R&D groups couldn’t be any higher than they are right now. The long-time leader of the fab world has faltered – repeatedly – and is now in the middle of a multi-year effort to course-correct, not only to get back the leadership position which they’ve lost, but to break into the contract chip manufacturing business in a big way.

As a result, while VLSI research papers do not normally attract a ton of outside attention, this year in particular is a big exception. With RibbonFET and PowerVia set to go into production next year, Intel is reaching the point where they’re wrapping up R&D work on the first generation of those technologies. Intel is now at the point where, to their peers in the VLSI industry, they can present their first findings around producing a complex logic test chip. And to Intel’s investors and other outsiders, Intel can show the first real evidence that their efforts to get back on track may very well be succeeding, giving Intel an opening to leap ahead of the competition that the company greatly needs.

To that end, at next week’s symposium Intel is going to be disclosing a great deal of information around their implementation of backside power delivery network technology, which they call PowerVia. Central to these papers is Blue Sky Creek, an Intel “product-like” logic test chip that implements backside power delivery on the EUV-enabled Intel 4 process technology. With Blue Sky Creek, Intel intends to demonstrate that not only do they have PowerVia working with a CPU on time for high volume manufacturing next year, but that the performance and manufacturing benefits of backside power delivery are everything Intel has promised. Suffice it to say, Intel is preparing for this year’s VLSI conference to be a very big moment for the company.

Backgrounder: Backside Power Delivery

Backside power delivery networks (BSP/BS-PDN) are a technology concept that has been quietly in development for the past several years across the entire chip fab industry. Similar to EUV in recent fab nodes, BS-PDN is seen as an essential technology to continue developing ever-finer process node technologies, and as a result, it’s expected that all leading-edge chip fabs will move to the technology in the future.

With that said, they are not all moving there at the same time. Intel expects to be first of the big three chip fabs to productize the technology, bringing it into chips at least two years ahead of its competitors. As a result, Intel is very much the pathfinder for the technology, which comes with no shortage of risks – but it also comes with significant rewards for getting the technology (and their timing) correct. For Intel, the company believes that this will be their new FinFET moment – a reference to Intel’s introduction of FinFETs on 22nm in 2012, which cemented Intel’s lead in the market for several years. This is particularly important as Intel won’t have a lead over its competitors when it comes to gate-all-around FETs (GAAFET) timing, so BS-PDN/PowerVia is going to be Intel’s trump card in the fab space for the next few years.

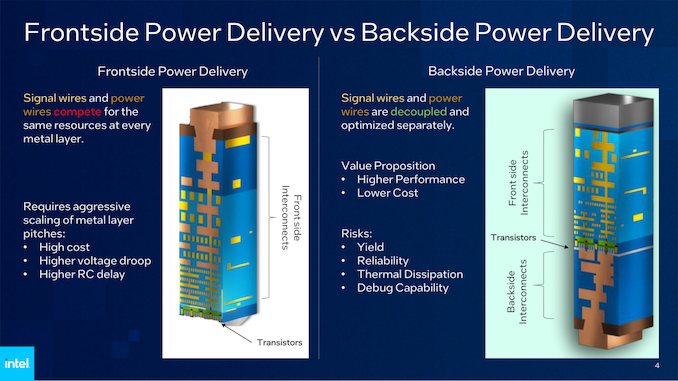

Meanwhile, to fully understand how BS-PDN works, the best place to start is to recap how traditional (frontside) power delivery works, so that we can contrast that to the many changes that come with building a BS-PDN wafer.

The manufacturing process of a modern, frontside power delivery chip starts with etching the transistor layer, M0. This is the smallest and most intricate layer on a die, and is where high-precision tools like EUV and multi-patterning are needed the most. In short, it’s the most expensive and complex layer of the chip, which has significant ramifications for both how chips are constructed and how they are tested.

Above that, additional metal layers are added at increasing sizes to account for all the wiring needed between the transistors and different parts of the processor (cache, buffers, accelerators), as well as providing routing for power that comes in from even further up the stack. Intel likens this to building a pizza, which is a crude analogy, but effective.

A modern high-performance processor typically has anywhere from 10 to 20 metal layers in its design. With the Intel 4 process in particular, there are 16 layers for logic, ranging from a pitch of 30 nm up to 280 nm. And then there’s another two “giant metal” layers on top of that just for power routing and placing external connectors.

Once a die has been fully fabbed and polished, the die is then flipped over (making this a flip chip) so that the chip can talk to the outside world. This flip places all the connectors (power and data) at the now-bottom of the chip, while the transistors end up at the top of the chip. Once flip chip manufacturing was mastered, this made chip debugging and cooling especially convenient, as it allowed debugging tools easy access to the all-important transistor layer. Meanwhile those hot little gates also became especially close to the chip’s cooler, allowing for the relatively easy transfer of heat out and away from a chip.

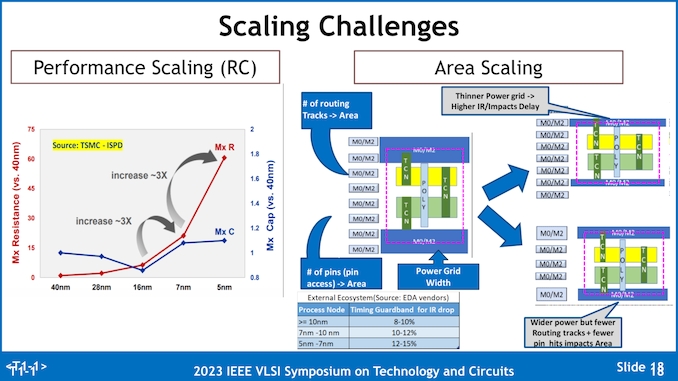

The drawback with frontside power delivery, however, is that it means both power and signal wires are on the same side of the chip. Both wires have to take a significant trip down through 15+ layers to reach the transistors, all the while competing for valuable space and creating interference with each other. For the power wires in particular, this is especially a nuisance, as the resistance along these wires causes the voltage of the incoming power to drop, which is known as the IR Drop/Droop effect.
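As a rough illustration of the IR drop effect described above, the sketch below applies Ohm's law (V = I × R) to a single power path. The supply voltage, current, and resistance values are made-up placeholders rather than figures from Intel, and a real power delivery network is a mesh of many paths rather than one resistor. Only the relationship is the point: the more resistance the power wires present, the less voltage reaches the transistors.

```python
def ir_drop(current_a: float, resistance_ohm: float) -> float:
    """Voltage lost along a power path, per Ohm's law (V = I * R)."""
    return current_a * resistance_ohm


def voltage_at_transistor(supply_v: float, current_a: float,
                          resistance_ohm: float) -> float:
    """Voltage that actually arrives at the transistor layer."""
    return supply_v - ir_drop(current_a, resistance_ohm)


# Hypothetical values, chosen only to illustrate the effect.
SUPPLY_V = 1.0    # voltage at the chip bump
CURRENT_A = 2.0   # current drawn through the path

# A long, resistive route down through the metal stack...
long_path_v = voltage_at_transistor(SUPPLY_V, CURRENT_A, resistance_ohm=0.05)
# ...versus a shorter route with half the resistance.
short_path_v = voltage_at_transistor(SUPPLY_V, CURRENT_A, resistance_ohm=0.025)

print(f"long path:  {long_path_v:.3f} V at the transistors")
print(f"short path: {short_path_v:.3f} V at the transistors")
```

Halving the path resistance in this toy model halves the voltage lost, which is why a more direct power route is attractive.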

For most of the history of chip fabbing, this hasn’t been a huge issue. But like so many other aspects of chip building, the problem has become more and more pronounced as chip feature sizes have shrunk. There is no obvious hard limit here on frontside power delivery, but given how much harder it is to shrink chips with every successive generation, the problem has become too big (or rather, too expensive) to work around.

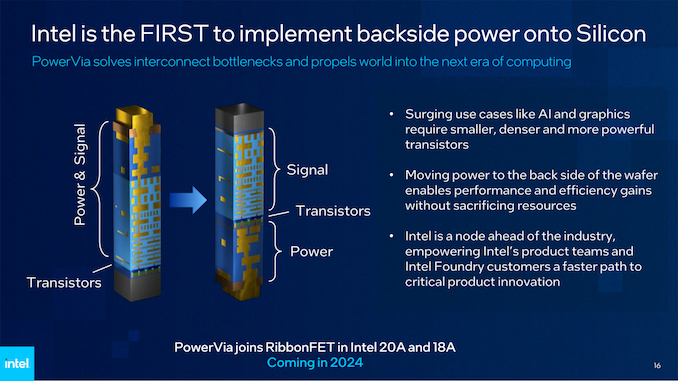

And that brings us to backside power delivery. If having both signals and power coming in on the same side of the chip is causing problems, why not separate the two? This, in a nutshell, is exactly what backside power delivery looks to resolve, by moving all of the power connections to the other side of the wafer.

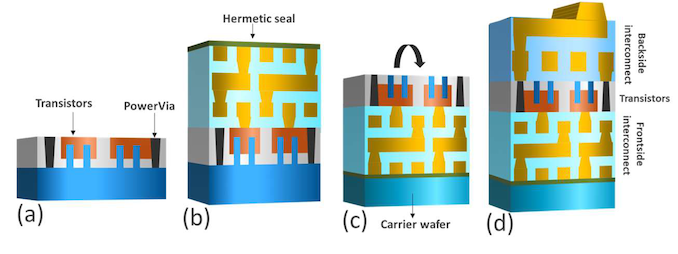

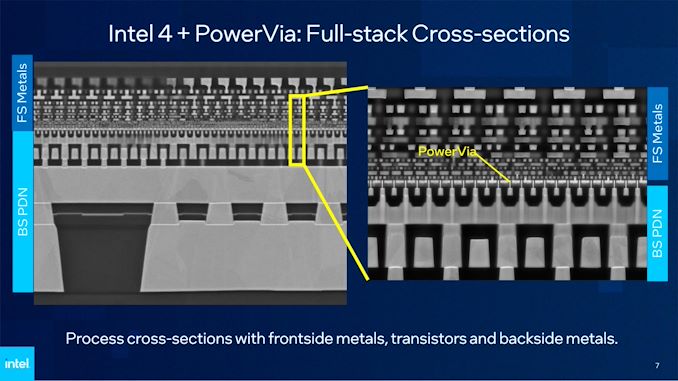

For Intel’s PowerVia implementation of this concept, Intel quite literally flips a wafer upside down, and polishes away almost all of the remaining silicon until they reach the bottom of the transistor layer. At that point, Intel then builds the metal layers for power delivery on the opposite side of the chip, similar to how they would have previously built them on the front side of the chip. The net result is that Intel ends up with what’s essentially a double-sided chip, with power delivery on one side and signaling on the other.

On paper (and in Intel’s paper), there are ultimately several benefits to moving to BS-PDN. First and foremost, this has a meaningful impact on simplifying the construction of a chip. We’ll get to Intel’s specific claims and findings in a bit, but of particular note here is that it allowed Intel to relax the density of their M0 metal layer. Rather than requiring a 30 nm pitch on Intel 4, Intel’s test node for Intel 4 + PowerVia allowed for a 36 nm pitch. That directly simplifies the most complex and expensive processing step of the entire chip, rolling it back to something closer in size to the Intel 7 process.

BS-PDN is also primed to deliver some modest performance improvements to chips as well. Shortening the route for power delivery to the transistors with something more direct helps to counteract the IR Droop effect, resulting in better power delivery to the transistor layer. And getting all of those power delivery wires out of the signal layers improves the performance of those as well, eliminating the power interference and giving chip designers more room to optimize their designs.

Otherwise, the trade-off for all of this is primarily in losing the previously mentioned perks of building a frontside chip. The transistor layer is now roughly in the middle of the chip, rather than at the end. This means that traditional debugging tools can’t directly poke the transistor layer of a completed chip to test it, and there are now 15 or so layers of signal wires between the transistor layer and the cooling surface. These aren’t insurmountable challenges, as Intel’s paper takes care to lay out, but they were issues that Intel had to work around in their design.

Manufacturability is the other set of trade-offs involved in moving to BS-PDN. Building power layers on the backside of a die is something that’s never been done before, which increases the chance of something going wrong. So not only does power delivery need to work, but it needs to work without significantly reducing chip yields or otherwise making chips less reliable. But if all of these things pan out, then the extra effort of building power layers on the backside of a wafer is more than cancelled out by the time and cost savings of not having to route power in through the front side.

Intel’s Added Wrinkles: Carrier Wafers & Nano TSVs

Because backside power delivery networks are going to become an industry standard feature in due time, we’ve tried not to focus too much on Intel’s specific implementation of BS-PDN/PowerVia. But now that we’ve hit the fundamentals of BS-PDN, there are a couple of Intel-specific implementation details worth noting.

First and foremost, Intel is using a carrier wafer as part of their construction process in order to provide chip rigidity. Bonded to the front side of a PowerVia wafer after the fabrication of that side is completed, the carrier wafer is a dummy wafer to help support the chip while Intel is blasting away at the other side. Because the two-sided chipmaking process polishes away so much of the remaining silicon wafer, there isn’t much structural silicon to hold the whole thing together. As Intel lightly jokes, despite this being silicon lithography, there’s only a tiny amount of silicon left on the wafer by the end.

That carrier wafer, in turn, remains as part of the chip for the rest of its life. Once chip fabbing is completed, Intel can polish the bonded carrier wafer down to the necessary thickness. Of note, because the carrier wafer is on the signal side of the chip, this means it presents another layer of material between the transistors and the cooler. Intel’s techniques to improve heat transfer take this into account, but for PC enthusiasts accustomed to transistors at the top of their chip, this is going to be a significant change.

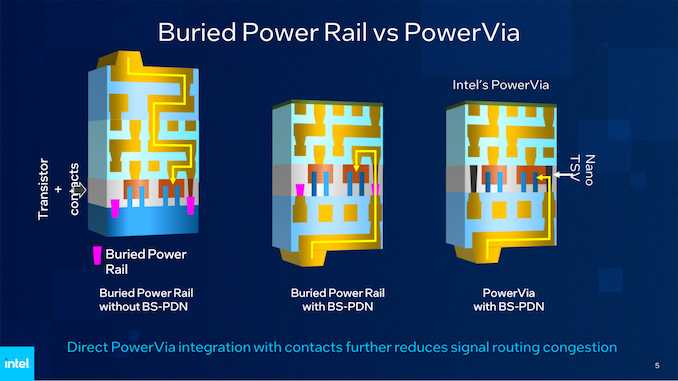

Intel’s other notable detail with their implementation of BS-PDNs is the use of TSVs for power routing. In PowerVia, there are nano-scale TSVs (aptly named Nano TSVs) in the transistor layer of the chip. This is in contrast to buried power rails, which is what industry pioneer IMEC has been looking into with their BS-PDNs.

The long and short of matters is that while buried power rails still require going up-and-over the transistor layer to deliver power, the use of TSVs allows power to be delivered more directly to the transistor layer. For Intel this is a technological advantage that they’re keen to exploit, as it avoids having to design and build in the routing required for buried power rails.

Blue Sky Creek: An Intel 4 + PowerVia Test Chip, Based on Crestmont E-Cores

To put PowerVia to the test and to prove that it’s working as expected, Intel’s validation vehicle for the technology is a chip they’re calling Blue Sky Creek.

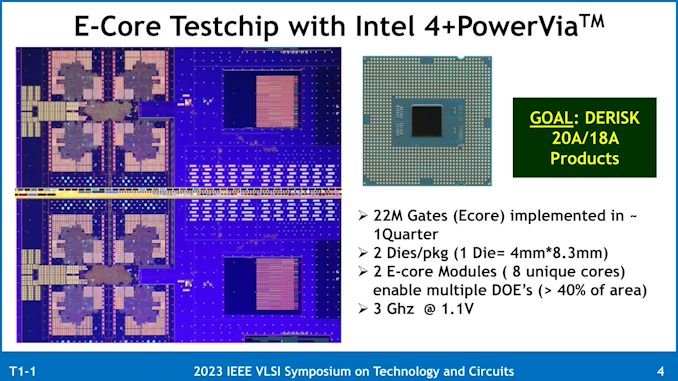

Derived from Intel’s Meteor Lake platform, Blue Sky Creek uses two dies of E-cores that are based on Intel’s Crestmont CPU architecture. The reason Intel is using Crestmont here is two-fold: first, it was originally designed for Intel 4, making it a good candidate for porting over to the Intel 4 + PowerVia process. And second, because E-cores are tiny; the quad-core test dies are just 33.2 mm² (4 mm x 8.3 mm) in size, making them a good balance between complexity for testing and not having to actually yield a massive die on an experimental process node.

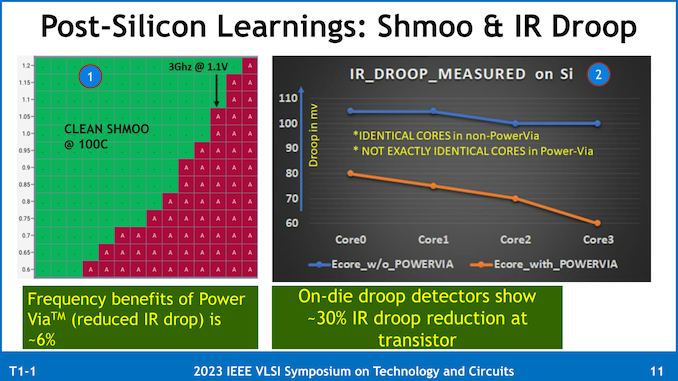

Intel isn’t focused too much on the performance of the E-cores in Blue Sky Creek. But they do note that the designed frequency was 3 GHz at 1.1 V.

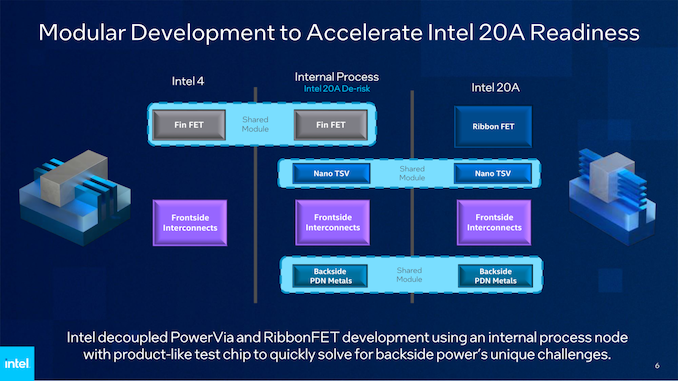

With regard to the bigger picture, Blue Sky Creek’s existence is part of Intel’s grand plan to mitigate the risk of introducing two major technological changes within the same process node – a problem that hit Intel rather hard with the overly-ambitious development plan for their 10nm (Intel 7) process node.

As outlined by the company back in 2022, PowerVia was considered the riskier of the two technologies. For that reason, Intel would be developing an interim test node just for PowerVia, allowing them to work on the technology separately from RibbonFETs. Ultimately, if PowerVia development had not progressed as expected, Intel could still do RibbonFET development, and bring out chips sans-PowerVia.

For similar reasons, you won’t find much discussion of RibbonFET from Intel for the VLSI conference. While it’s an important technology for the company, it’s essentially a sure thing for Intel. The company won’t even be the first fab with GAAFET technology, so while RibbonFET does come with its own challenges, Intel didn’t need to prepare any risk mitigation strategies for it in the same way as they did for PowerVia.

PowerVia In Practice: Intel Finds 30% Reduction in IR Droop, 6% Higher Clockspeeds, Ready for HVM

Diving into the results of Intel’s PowerVia papers, the company has broken out their results for Blue Sky Creek on a metric-by-metric basis, looking at everything from chip density to performance to thermals.

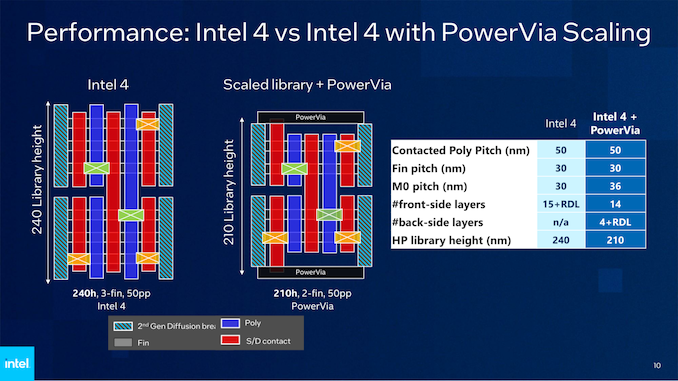

Starting with chip density, here’s a look at the size of the high performance (HP) library cells that Intel developed and fabbed for their test chip, and how those cells compare to the equivalent Intel 4 cells. As noted earlier, the shift to PowerVia has allowed Intel to relax the pitch of the critical M0 metal layer, enlarging it from 30 nm to 36 nm. The other major parameters of the node, such as the fin pitch and contacted poly pitch (CPP) remain at 30 nm and 50 nm respectively.

Yet, those changes, combined with Intel’s ability to reduce the number of fins in use from 3 to 2, result in an overall smaller cell. Thanks to PowerVia, Intel was able to bring the library height down to 210 nm, from 240 nm on Intel 4. And with the CPP holding constant at 50 nm, that means the overall cell size has been reduced by 12.5%, despite not using a formally “denser” node.
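The 12.5% figure can be checked with a quick back-of-the-envelope calculation. The sketch below uses the library heights and CPP quoted above, and makes the simplifying assumption that cell area scales as library height × CPP for a cell of the same width in gate pitches; the function name is ours, not Intel's.

```python
def cell_area_nm2(library_height_nm: float, cpp_nm: float,
                  width_in_cpp: int = 1) -> float:
    """Approximate standard cell area as height x (CPP x width in gate pitches).

    Simplifying assumption: real cells span several gate pitches, but the
    width cancels out when comparing like-for-like cells at the same CPP.
    """
    return library_height_nm * cpp_nm * width_in_cpp


CPP_NM = 50  # contacted poly pitch, unchanged between the two nodes

intel4_area = cell_area_nm2(240, CPP_NM)    # Intel 4 HP library
powervia_area = cell_area_nm2(210, CPP_NM)  # Intel 4 + PowerVia HP library

reduction = 1 - powervia_area / intel4_area
print(f"Cell area reduction: {reduction:.1%}")
```

Because the CPP is identical on both sides of the comparison, the result reduces to the ratio of the library heights alone: (240 − 210) / 240.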

Meanwhile, this disclosure also gives us a proper look at the total number of layers involved in the two chips. Whereas a standard Intel 4 chip will have 15 layers plus the redistribution layer (RDL) all on one side, the PowerVia test chip had 14 layers on the signal (front) side, and another 4 layers plus the RDL on the power (back) side. That is a net gain of 3 layers overall, so at least as far as Intel’s test chip goes, it’s more complex in terms of the total number of layers used. But on the flip side, all of those new layers come on the power side of the chip, where they’re all relatively large and easy to fab. Which is why Intel considers the additional layers a minimal drawback compared to not having to manufacture a 30 nm pitch M0 layer.

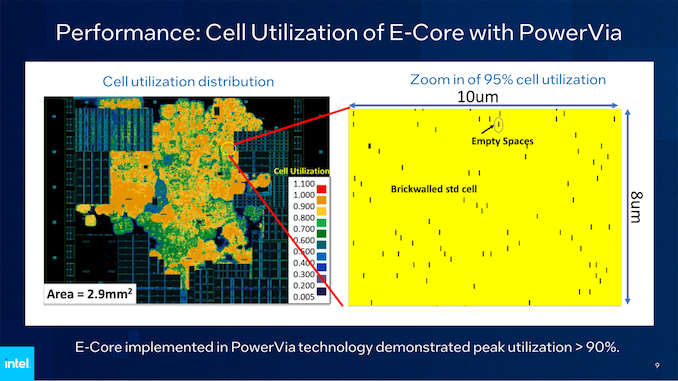

The density of these cells is also quite impressive. By moving to backside power delivery, Intel was able to utilize 95% of the space within one of the denser spots within the E-core cell. Unfortunately, Intel didn’t give comparable numbers for E-cores on Intel 4, but in general, the utilization is not quite that high.

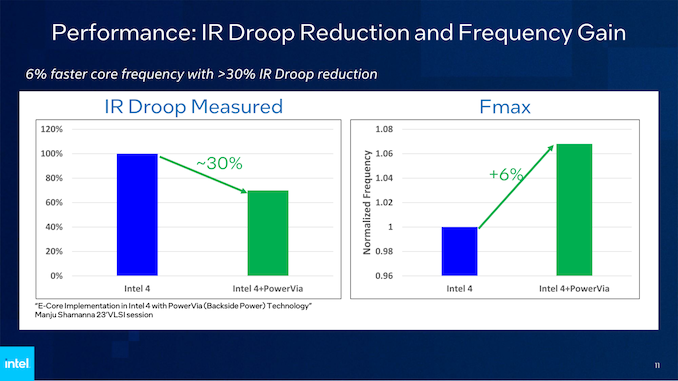

As for the electrical performance of the E-cores, as expected, PowerVia delivers a significant reduction in IR Droop. Intel measured a 30% reduction in droop on their test chip as compared to an Intel 4-built E-core, and an even greater 80% (5x) reduction in droop at the actual chip bump level.
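For readers who want to see how a percentage reduction maps to an "N times" figure, here is a minimal sketch of the conversion. The function name is hypothetical, and the inputs are simply the two percentages Intel quotes; no absolute droop values are assumed.

```python
def reduction_to_factor(percent_reduction: float) -> float:
    """Convert a percentage reduction into an 'N times smaller' factor.

    An 80% reduction leaves 20% of the original value, i.e. 1 / 0.2 = 5x.
    """
    if not 0 <= percent_reduction < 100:
        raise ValueError("percent_reduction must be in [0, 100)")
    remaining_fraction = 1 - percent_reduction / 100
    return 1 / remaining_fraction


bump_level_factor = reduction_to_factor(80)  # droop at the chip bump level
core_level_factor = reduction_to_factor(30)  # droop measured on the E-core

print(f"80% reduction -> {bump_level_factor:.2f}x lower droop")
print(f"30% reduction -> {core_level_factor:.2f}x lower droop")
```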

That clean power delivery, in turn, improves the compute performance and the energy efficiency of the chip. Besides the direct efficiency gains from losing less energy to resistance, constructing an E-core with PowerVia technology improved the maximum frequency (fMax) of the core by 6%. Intel doesn’t offer a more detailed explanation as to why this improved their clockspeeds, but I suspect it has to do with a higher voltage actually reaching the transistors themselves, allowing them to clock a bit higher.

And while a 6% clockspeed uplift isn’t a massive gain, it’s essentially a “free” improvement coming from a technology that is designed to improve the manufacturability of a chip. Intel has worked harder to get smaller clockspeed improvements in the past.

Interestingly, Intel did find that the new IR Droop values were not the same across all of the E-cores. Whereas the standard Intel 4 chip had a fairly consistent droop over all 4 cores, the droop for the test chip was between 60 mV and 80 mV, depending on the core. As this is a research presentation, Intel doesn’t go into the product ramifications of that, but assuming that production chips have a similarly wide range of variability, it may mean we see a greater emphasis on favored/prime cores in future products.

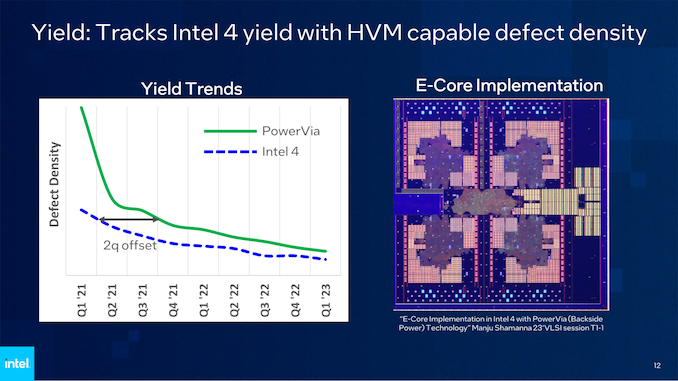

So PowerVia/BS-PDN works. But can Intel manufacture it at scale? The answer to that is also yes.

According to Intel, the defect density for their experimental Intel 4 + PowerVia process node is only about two quarters behind the Intel 4 process itself (which is already in HVM). This means that not only is the defect density low enough to be suitable for mass production in short order, but if Intel were scaling this specific node up for production, they would have similar chip yields as current, non-PowerVia Intel 4 chips in just two quarters.

Similarly, Intel reports that transistors built on the PowerVia process are very similar to those of the Intel 4 process. Which in this case, is very much a good thing, as it means those transistors aren’t doing unexpected things that impact performance or chip reliability. For that matter, Intel also noted that they observed no power-related reliability violations on the test chip, demonstrating that despite the significant changes to power delivery, the introduction of backside power delivery did not result in a less reliable power delivery system for the chip.

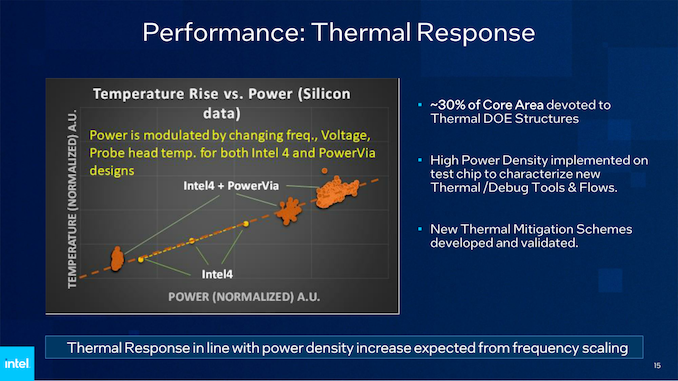

And while Intel isn’t going into the exact measures they’re employing to mitigate the thermal impacts of the transistor layer no longer being at the top of a flipped chip, the company is publishing data showing that the mitigations they have undertaken have been successful.

When plotted against the thermal response curve for an Intel 4 chip, Intel’s test chip demonstrated a very similar thermal response curve. Which is to say that at a given power level, the resulting temperatures were in-line with an Intel 4 chip. And as you may notice from Intel’s chart, they even went ahead and overfed the chip a bit to simulate even higher thermal loads, to better characterize the thermal response curve and to make sure thermal scaling wasn’t breaking down at higher thermal densities.

As noted earlier, thermals/cooling are one of the two big product-level challenges with backside power delivery. Placing transistors in what’s become the middle of the chip has not done chip cooling any favors, but Intel believes they have developed the necessary mitigation strategies to bring PowerVia chips in line with traditional frontside-fabbed flip chips.

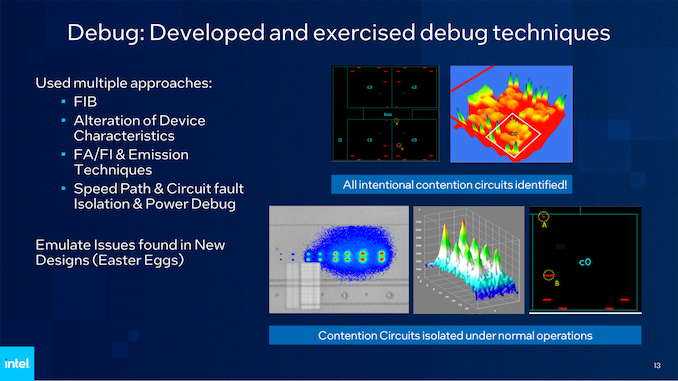

Those mitigations go for debugging as well, which was the other challenge with BS-PDN. Intel has developed a suite of new and modified debugging techniques to deal with the fact that both sides of a chip are now in use. And while, to quote the abstract of Intel’s paper, “Failure debug and defect isolation is complex due to the presence of metallization on both sides of the wafer,” ultimately, Intel was able to develop the necessary tools.

Interestingly, Intel even placed some “Easter Egg” defects within the chip’s design in order to provide some semi-controlled defects for Intel’s validation teams to work against. According to Intel, their validation teams found all of those eggs with their PowerVia debugging tools, helping to prove the validity of those debug processes.

PowerVia To Land in Intel Chips in 2024

Wrapping things up, ahead of next week’s VLSI conference Intel is laying out a very convincing case that they are on the right track with their PowerVia backside power delivery technology development. Having a complex test chip up and running is a big deal in this regard, as having actual data and experience to draw from is a major step towards fine-tuning the technology for high volume manufacturing, all the while showcasing that Intel is so far meeting their aggressive fab development goals.

Ultimately, PowerVia is perhaps the single biggest make-or-break moment for Intel in terms of fully recovering momentum and potentially retaking leadership within the silicon lithography business. If Intel can deliver on its promises, the company is expecting to be at least two years ahead of TSMC and Samsung in deploying backside power delivery – and that means at least two years of reaping the cost and performance benefits of the technology. TSMC for its part is not expecting to deploy backside power until its N2P node in late 2026 or early 2027, while it remains unclear when Samsung will make their own transition.

As for Intel, if everything continues to go to plan, Intel will begin high volume manufacturing with PowerVia in 2024, when the company is scheduled to bring both its Intel 20A and Intel 18A processes online. The first consumer processor to launch using the technology will be Intel’s Arrow Lake architecture, which will be a future generation Core product built on the 20A node.